I have been trying to find several of these so I was glad to see this list published today. I simply copied this from MSDN and cleaned it up to make it readable.

Deployment Logs

Can’t get Azure Stack to install correctly?

On the host, run Invoke-DeploymentLogCollection.ps1 from <mounted drive letter>:\AzureStackInstaller\PoCDeployment\

The logs will be collected to C:\LogCollection\<GUID>

Note: Running the script in verbose mode (with the -verbose parameter) provides details about what is being saved from each machine (localhost or VM).

For the host and for the VMs, this script collects the following data:

| Data collected | Path |
| --- | --- |
| Event logs (from Event Viewer) | "$env:SystemRoot\System32\Winevt\Logs\*" |
| Tracing directory (includes the SLB Host Agent logs) | "$env:SystemRoot\tracing\*" |
| Azure Stack POC Deployment Logs | "$env:ProgramData\Microsoft\AzureStack\Logs" |
| Azure Consistent Storage (ACS) Logs | "$env:ProgramData\Woss\wsmprovhost |
| Compute Resource Provider (CRP) Logs | "C:\CrpServices\Diagnostics" |

Azure Stack Deployment failure logs:

On the Physical Host, these are under C:\ProgramData\Microsoft\AzureStack\Logs

Fabric Detailed logs:

C:\ProgramData\Microsoft\AzureStack\Logs\AzureStackFabricInstaller

Services/Extension logs:

C:\ProgramData\Microsoft\AzureStack\Logs\AzureStackServicesInstallerf60e6d-95c2-458e-baa5-32f3e9b6ab77

Log Collection for ACS issues

- Capture Blob Service logs

  - Zip the C:\programdata\woss folder on the physical host machine.

- Capture Event Viewer logs on the physical host machine

  - Capture the Admin, Debug and Operational channel event logs under "Application and Services Logs\Microsoft\AzureStack\ACS-BlobService" (use "Save All Events As" in the right pane)

  - Also capture the Application and System logs in Event Viewer (Windows Logs)

- Capture blob service crash dumps [if any] on the physical machine. To find the crash dump location, use the following command.

C:\>reg query "HKLM\SOFTWARE\Microsoft\Windows\Windows Error Reporting\LocalDumps\BlobSvc.exe" /v DumpFolder

In this TP1 release, the automatic log collection process does not include log collection for SRP. To collect the SRP service log, follow the steps below. The example collects the last 10 minutes; change the AddMinutes(-10) call (or use another Add<UnitOfTime>(value) method) so that the window between $start and $end covers the time the error happened.

- Log in to the xRPVM virtual machine as domain admin (AzureStack\administrator)

- Open a PowerShell window, and run the cmdlets below:

cd "C:\ProgramData\Windows Fabric\xRPVM\Fabric\work\Applications\SrpServiceAppType_App1\SrpService.Code.10.105521000.151210.0200"

Import-Module .\WossLogCollector.ps1

$cred = get-credential

$end = get-date

$start = $end.AddMinutes(-10)

Invoke-WossLogCollector -StartTime $start -EndTime $end -Credential $cred -TargetFolderPath \\sofs.azurestack.local\share -SettingsStoreLiteralPath file:\\sofs.azurestack.local\Share\ObjectStorageService\Settings -WindowsFabricEndpoint ACSVM.AzureStack.local:19000

- The collected logs are under \\sofs.azurestack.local\share, packed as a ZIP file tagged with a timestamp.
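When the error happened some time ago, the window passed to Invoke-WossLogCollector has to be built around that moment rather than around "now". The following is a minimal sketch of one way to do it. `Get-LogCollectionWindow` and its parameters are hypothetical names, not part of WossLogCollector.ps1, and the timestamp is only an illustrative value.

```powershell
# Hypothetical helper (not part of WossLogCollector.ps1): builds a start/end
# window around the time an error was observed.
function Get-LogCollectionWindow {
    param(
        [Parameter(Mandatory)] [datetime] $ErrorTime,
        [int] $MinutesBefore = 10,
        [int] $MinutesAfter = 5
    )

    if ($MinutesBefore -lt 0 -or $MinutesAfter -lt 0) {
        throw 'MinutesBefore and MinutesAfter must not be negative.'
    }

    [pscustomobject]@{
        StartTime = $ErrorTime.AddMinutes(-$MinutesBefore)
        EndTime   = $ErrorTime.AddMinutes($MinutesAfter)
    }
}

# Illustrative value: an error seen at 23:32 local time on 2016-02-04.
$window = Get-LogCollectionWindow -ErrorTime ([datetime]'2016-02-04T23:32:00') -MinutesBefore 15
$start  = $window.StartTime
$end    = $window.EndTime
```

$start and $end can then be passed to -StartTime and -EndTime exactly as in the commands above.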

Manual Log Collection for the Multi-Compartment Network Proxy Service

Multi-Compartment Network Proxy enables the agent inside the VM to reach the metadata server and report back status.

To get proxy logs:

Check that the proxy service is running (sc query or PowerShell's Get-Service), then capture a trace:

wpr -start tracer.wprp -logmode

<restart proxy service>

<repro>

wpr -stop c:\tracefile.etl

Network Resource Provider (NRP) logs

NRP logs are not enabled by default. To gather logs in a specific situation:

- As the Service Admin, start the trace on the xRPVM:

cmd /c "logman start mysession -p {7978B48C-76E9-449D-9B7A-6EFA497480D4} -o .\etwtrace.etl -ets"

- Reproduce the error scenario

- Stop the trace:

cmd /c "logman stop mysession -ets"

cmd /c "tracerpt etwtrace.etl -y"

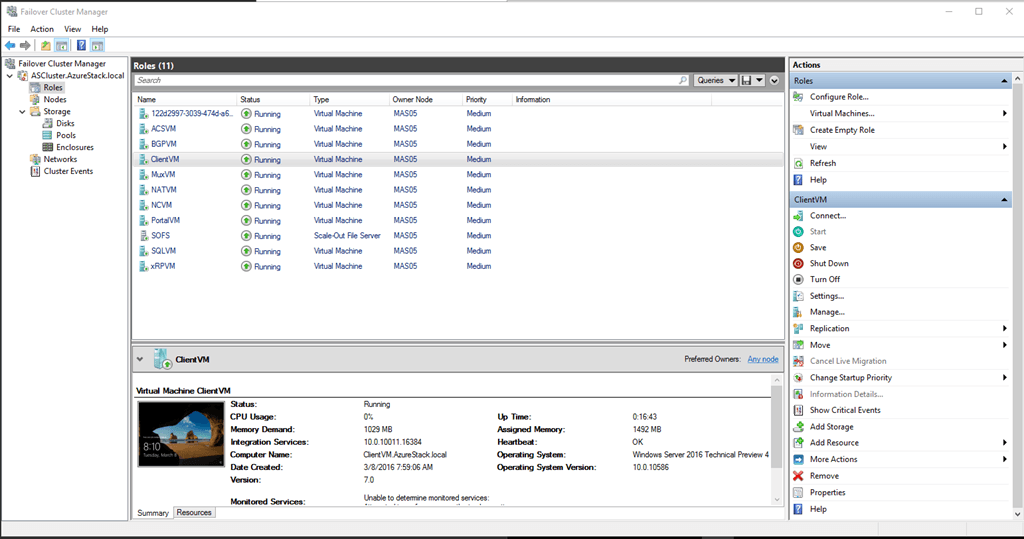

Summary/Additional Log Locations on the Hyper-V host

| Path | Contents |
| --- | --- |
| C:\ProgramData\Microsoft\AzureStack | Directory "Logs" includes Azure Stack POC deployment/TiP deployment logs |
| C:\ProgramData\WOSS | Azure Consistent Storage (ACS) Logs |
| C:\ProgramData\WossBuildout\<GUID>\Deployment | Azure Consistent Storage (ACS) deployment logs |
| C:\ProgramData\Microsoft\ServerManager\Events | File server events |
| C:\ProgramData\Windows Fabric\Log\Traces | WinFabric traces |
| C:\Windows\System32\Configuration | Desired State Configuration (DSC) files |
| C:\ClusterStorage\Volume1\Share\Certificates | Certificates |
| C:\Windows\tracing\ | Directory "SlbHostAgentLogs" includes SI Host Plugin logs |
| C:\Windows\Cluster\Reports | Cluster creation & Storage validation |
| C:\Windows\Logs\DISM\dism.log and C:\Windows\Logs\CBS\cbs.log | Role additions (e.g. Hyper-V) |

ARM Logs

On the PortalVM, Portal/ARM logs can be found in Event Viewer under Applications and Services Logs -> Microsoft -> AzureStack.

Note: ARM logs are in "Frontdoor".

Control Plane Logs

Control plane logs are in Event Viewer, under Applications and Services Logs -> Microsoft -> AzureStack. Some key logs to look at:

- Azure Resource Manager: Microsoft-AzureStack-Frontdoor/Operational

- Subscriptions service: Microsoft-AzureStack-Subscriptions/Operational

- Portal Shell site: Microsoft-AzureStack-ShellSite/Operational
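The same channels can also be read from PowerShell instead of clicking through Event Viewer. This is a minimal sketch using the standard Get-WinEvent cmdlet. The channel names are the ones listed above, while `Get-ControlPlaneEvent` and its parameters are hypothetical names chosen for illustration.

```powershell
# Channel names taken from the list above.
$controlPlaneLogs = @(
    'Microsoft-AzureStack-Frontdoor/Operational',
    'Microsoft-AzureStack-Subscriptions/Operational',
    'Microsoft-AzureStack-ShellSite/Operational'
)

# Hypothetical helper: returns the newest critical, error and warning events
# from each channel. Channels that do not exist on the machine are skipped.
function Get-ControlPlaneEvent {
    param(
        [string[]] $LogName = $controlPlaneLogs,
        [int] $MaxEvents = 20
    )

    foreach ($name in $LogName) {
        # Level 1 = Critical, 2 = Error, 3 = Warning
        $filter = @{ LogName = $name; Level = 1, 2, 3 }
        Get-WinEvent -FilterHashtable $filter -MaxEvents $MaxEvents -ErrorAction SilentlyContinue |
            Select-Object TimeCreated, LogName, LevelDisplayName, Id, Message
    }
}
```

Run on the VM that hosts these logs, `Get-ControlPlaneEvent -MaxEvents 10 | Format-List` prints the most recent problems per channel.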

Compute Resource Provider (CRP) diagnostics logs

On the xRPVM machine, these are located under

C:\CrpServices\Diagnostics\AzureStack.compute.log

Note: the logs are rolled and backed up every day.

Logs for Azure provisioning agent

The log produced by the Azure provisioning agent (PA) is inside a tenant virtual machine at

C:\windows\panther\wasetup.xml

Logs for VM DSC extensions

In addition to any ARM messages in the portal, these logs are currently stored in the tenant-deployed VM:

C:\Packages\Plugins

For the DSC extension, the DscExtensionHandler log is in

C:\WindowsAzure\Logs\Plugins\Microsoft.Powershell.DSC\<version>\DscExtensionHandler.<index>.<date>-<time>.log

and the extension status is in

C:\Packages\Plugins\Microsoft.Powershell.DSC\<version>\Status\0.status

The VM agent log is in

C:\WindowsAzure\Logs\WaAppAgent.log
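Because the handler log name contains a version folder, an index and a timestamp, it is often easier to let PowerShell pick the newest file. This is a minimal sketch that runs inside the tenant VM. `Get-LatestLogFile` is a hypothetical helper name, and the filter simply mirrors the file name pattern shown above.

```powershell
# Hypothetical helper: returns the most recently written file that matches
# the filter, searching sub-folders such as the <version> directory.
function Get-LatestLogFile {
    param(
        [Parameter(Mandatory)] [string] $Directory,
        [string] $Filter = 'DscExtensionHandler.*.log'
    )

    Get-ChildItem -Path $Directory -Filter $Filter -File -Recurse -ErrorAction SilentlyContinue |
        Sort-Object -Property LastWriteTime -Descending |
        Select-Object -First 1
}

# Show the last 50 lines of the newest handler log, if one exists.
$log = Get-LatestLogFile -Directory 'C:\WindowsAzure\Logs\Plugins\Microsoft.Powershell.DSC'
if ($log) {
    Get-Content -Path $log.FullName -Tail 50
}
```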

Getting the status for VM extensions

You can also use a script such as the following one, targeting the corresponding Resource Group:

Get-AzureRmResourceGroupDeployment -ResourceGroupName $tenantRGName

$vms = Get-AzureRmVM -ResourceGroupName $tenantRGName

# Iterate over the VM resource names; the OS computer name can differ from the resource name that -Name expects.

foreach ($vmname in $vms.Name)

{

$status = Get-AzureRmVM -ResourceGroupName $tenantRGName -Status -Name $vmname

Write-Host $status.Name

Write-Host $status.ExtensionsText

Write-Host $status.StatusesText

Write-Host $status.BootDiagnosticsText

Write-Host $status.DisksText

Write-Host $status.VMAgentText

}

Information about Resource Deployment Status:

In the Portal, under Resource Explorer/Subscriptions/<yourSubscription>/<your resource group>/Deployments/Microsoft.Template/Operations/

Examples:

Error:

"properties": {

"provisioningState": "Failed",

"timestamp": "2016-02-04T23:32:21.3841604Z",

"duration": "PT3.343173S",

"trackingId": "0af85051-1350-493c-bc95-313dd6c0a874",

"statusCode": "BadRequest",

"statusMessage": {

"error": {

"code": "OperationNotSupported",

"message": "PUT operation on resource /subscriptions/922819A8-30D6-49E5-9D15-010A66FD6B39/resourcegroups/newme1/providers/Microsoft.Network/loadBalancers/mesos-master-lb-01234567/inboundNatRules/SSH-mesos-master-01234567-0 is not supported.",

"details": [],

"innerError": "Microsoft.WindowsAzure.Networking.Nrp.Frontend.Common.ValidationException: PUT operation on resource /subscriptions/922819A8-30D6-49E5-9D15-010A66FD6B39/resourcegroups/newme1/providers/Microsoft.Network/loadBalancers/mesos-master-lb-01234567/inboundNatRules/SSH-mesos-master-01234567-0 is not supported."

}

},

"targetResource": {

"id": "/subscriptions/922819A8-30D6-49E5-9D15-010A66FD6B39/resourceGroups/newme1/providers/Microsoft.Network/loadBalancers/mesos-master-lb-01234567/inboundNatRules/SSH-mesos-master-01234567-0",

"resourceType": "Microsoft.Network/loadBalancers/inboundNatRules",

"resourceName": "mesos-master-lb-01234567/SSH-mesos-master-01234567-0"

}

}

Succeeded:

"properties": {

"provisioningState": "Succeeded",

"timestamp": "2016-02-04T23:32:30.9349745Z",

"duration": "PT6.7912649S",

"trackingId": "8b9a3e65-6a64-4fba-a69e-ff64631e8a92",

"statusCode": "Created",

"targetResource": {

"id": "/subscriptions/922819A8-30D6-49E5-9D15-010A66FD6B39/resourceGroups/newme1/providers/Microsoft.Network/networkInterfaces/jb-01234567-nic",

"resourceType": "Microsoft.Network/networkInterfaces",

"resourceName": "jb-01234567-nic"

}

}
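With many operations in a deployment, it can help to reduce each record to the few fields that matter. This is a minimal sketch, assuming an operation record has been copied from Resource Explorer and wrapped in braces so that it is valid JSON. `Get-OperationSummary` is a hypothetical helper name, and the sample is a trimmed copy of the failed record above. The duration field is an ISO 8601 duration, which the .NET XmlConvert class can parse.

```powershell
# Trimmed copy of the failed record above, wrapped in braces to form valid JSON.
$json = @'
{
  "properties": {
    "provisioningState": "Failed",
    "duration": "PT3.343173S",
    "statusCode": "BadRequest",
    "statusMessage": {
      "error": { "code": "OperationNotSupported" }
    },
    "targetResource": {
      "resourceType": "Microsoft.Network/loadBalancers/inboundNatRules",
      "resourceName": "mesos-master-lb-01234567/SSH-mesos-master-01234567-0"
    }
  }
}
'@

# Hypothetical helper: summarizes one deployment operation record.
function Get-OperationSummary {
    param([Parameter(Mandatory)] [string] $Json)

    $p = ($Json | ConvertFrom-Json).properties

    [pscustomobject]@{
        Resource  = $p.targetResource.resourceName
        Type      = $p.targetResource.resourceType
        State     = $p.provisioningState
        # "PT3.343173S" is an ISO 8601 duration; convert it to seconds.
        Seconds   = [System.Xml.XmlConvert]::ToTimeSpan($p.duration).TotalSeconds
        # Empty for succeeded operations, which carry no statusMessage.
        ErrorCode = $p.statusMessage.error.code
    }
}

Get-OperationSummary -Json $json
```

Filtering the summaries on `State -eq 'Failed'` then points directly at the resource and error code to investigate.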